Amazon releases dataset for training robots

The dataset of images collected in an industrial setting features more than 190,000 objects, orders of magnitude more than previous datasets.

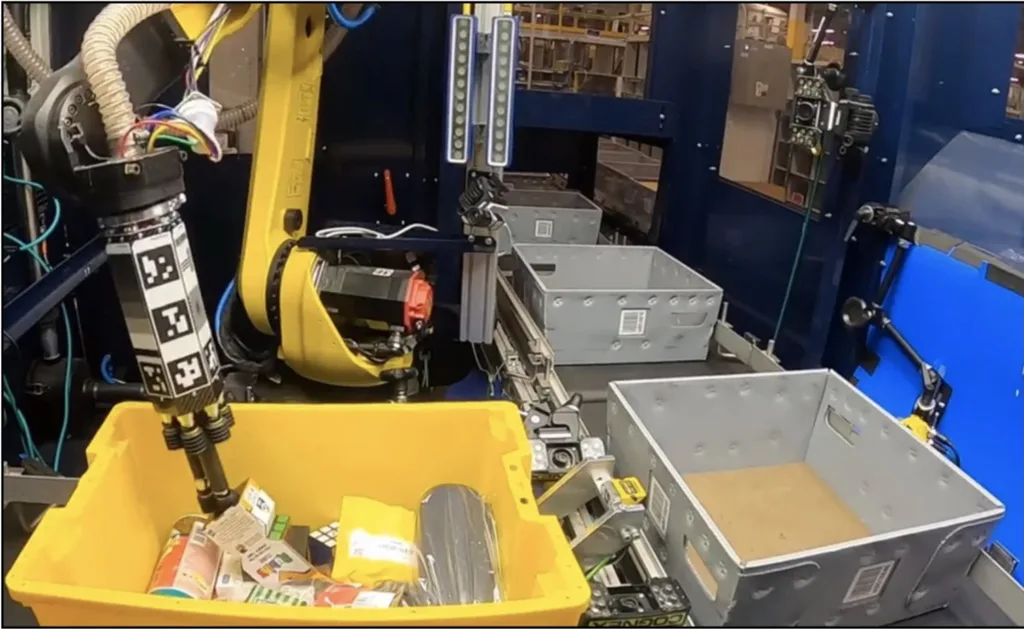

In an effort to improve the performance of robots that pick, sort, and pack products in warehouses, Amazon has publicly released the largest dataset of images captured in an industrial product-sorting setting. Where the largest previous dataset of industrial images featured on the order of 100 objects, the Amazon dataset, called ARMBench, features more than 190,000 objects. At that scale, it could be used to train pick-and-place robots.

The scenario in which the ARMBench images were collected involves a robotic arm that must retrieve a single item from a bin full of items and transfer it to a tray on a conveyor belt.

ARMBench contains image sets for three separate tasks: (1) object segmentation, or identifying the boundaries of different products in the same bin; (2) object identification, or determining which product image in a reference database corresponds to the highlighted product in an image; and (3) defect detection, or determining when the robot has committed an error, such as picking up multiple items rather than one or damaging an item during transfer.
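The object-identification task described above can be read as a retrieval problem: compare the highlighted product against every entry in the reference database and return the closest match. The sketch below is only an illustration of that idea, not ARMBench's method. It assumes each image has already been reduced to a feature vector by some model, and the product IDs, vectors, and function names are all hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def identify(query, references):
    """Return the ID of the reference vector most similar to the query vector."""
    if not references:
        raise ValueError("reference database is empty")
    return max(references, key=lambda pid: cosine_similarity(query, references[pid]))


# Hypothetical reference database: product ID -> feature vector (assumed, not real data).
reference_db = {
    "product_a": [1.0, 0.0, 0.0],
    "product_b": [0.0, 1.0, 0.0],
    "product_c": [0.6, 0.8, 0.0],
}

# Hypothetical feature vector for the highlighted product in a pick image.
query_vector = [0.1, 0.9, 0.0]
best_match = identify(query_vector, reference_db)
print(best_match)
```

With a reference set on the scale of ARMBench's 190,000 objects, a real system would likely need an indexed or approximate search rather than this linear scan.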

The images in the dataset fall into three different categories: the pick image is a top-down image of a bin filled with items, prior to robotic handling; transfer images are captured from multiple viewpoints as the robot transfers an item to the tray; the place image is a top-down image of the tray in which the selected item is placed.
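One way to picture the three image categories is as a single record per pick attempt. The following is a minimal sketch under that assumption; the class name, field names, and file names are hypothetical and do not reflect the dataset's actual file layout or annotation format.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PickActivity:
    """One robotic pick attempt, grouped by the three image categories."""

    # Top-down image of the bin before the robot handles anything.
    pick_image: str
    # Images captured from multiple viewpoints during the transfer.
    transfer_images: List[str] = field(default_factory=list)
    # Top-down image of the tray after the item is placed (may be absent).
    place_image: Optional[str] = None

    def all_images(self) -> List[str]:
        """Return every image path in chronological order: pick, transfer, place."""
        images = [self.pick_image, *self.transfer_images]
        if self.place_image is not None:
            images.append(self.place_image)
        return images


# Hypothetical file names, for illustration only.
activity = PickActivity(
    pick_image="pick.jpg",
    transfer_images=["transfer_0.jpg", "transfer_1.jpg"],
    place_image="place.jpg",
)
print(activity.all_images())
```

Grouping the images this way keeps the before, during, and after views of one attempt together, which is the order a defect-detection model would plausibly need to reason about.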

For more information, visit www.amazon.science